Redefining Artificial Intelligence is the new hotness


written by owen on 2017-Dec-04.

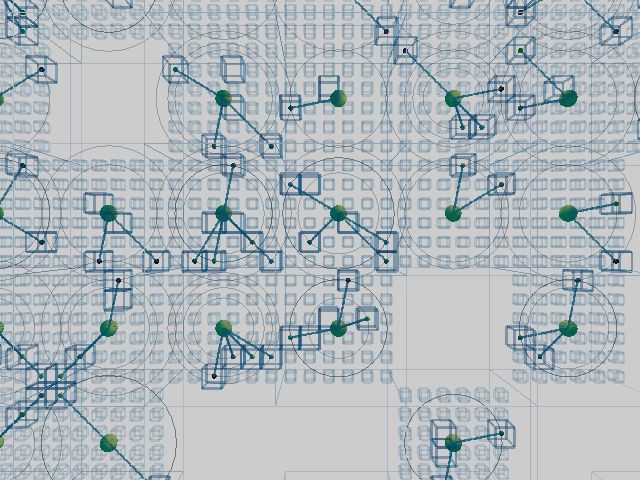

The trend in A.I. in 2017 seems to be a move to change the definition of AI rather than ACTUALLY making the AI we want. Instead of AI we are getting side quests. The A.I. game is hot now and everyone wants in on it. On one hand you have the common assumptions that AI will take our jobs, make our lives easy or ultimately destroy us all. On the other hand you have the marketing and video tutorials, which primarily play to the lowest common denominator. AI has left computers and gone into the "cloud". The cloud: a mysterious black box of proprietary software that no one understands, yet everyone seems to know all too well the instant virtues this new cloud AI will provide. Having things in the cloud is especially useful for people who like to speculate and talk in presumptions.

The cloud as AI magic sauce

In essence the cloud is just another computer somewhere in a data center. But common people will have you believe that it is some fantastic, mysterious thing. In reality, whatever you can do in the cloud can be done on any computer given enough time and sample data. Having cloud stuff is useful because it's out of reach and therefore unverifiable until it happens. It's not AI, it's someone else's stolen data. But of course no one has the time, especially the people praising the potential virtues of A.I. They would rather sit around watching science fiction movies and wait for Netflix, Google, Amazon or whichever big company to sell them A.I. "Talk about it enough and retweet it, and it will become reality" is the new-age social media version of building something in your garage. Social media has become the purview of professional talkers: visibly large but shallow and without substance upon closer inspection. Not unlike clouds.

Most of these cloud services are actually data collection points that use big data to train software into the appearance of intelligence. What was "Big Data" in the past is now A.I., deep learning or neural nets; it depends on who is doing the branding. With enough data you could mimic pretty much anything, and there is a lot of data out there that is freely available. Better yet, you can fool people into giving it all to you by latching the collection system onto some other service to which they are addicted. Something I like to call a sharing black hole: you put data in but nothing comes out. But data by itself is not really A.I. Alexa is not AI. Alexa is not even a sign of how far AI has come. It's a search engine.

What AI used to be

The base goal was to make A.I. that simulates human intelligence, but that goal is still a pretty hard and steep hill to climb. Steep hills are what technology is all about; this philosophy is what keeps technology focused on its goal. If you ignore a thing like the Turing test and begin to dilute a term like "Artificial Intelligence" into meaning a mixture of "heuristics", "rules-based" systems, "neural networks", "deep learning", "pattern matching" or BI, you also devalue the end goal. Alexa and big data search are all software that we can already build. We just keep putting more and more computing power into doing the same things over and over and calling it by different names. Remember Clippy, the Microsoft Office paperclip man? Some people today would call that AI as well.

The thirst for new and exciting advancements in technology is so high that pundits will say or write anything to make it more real. It is almost as if they can taste it on the tips of their tongues: next week, next month, next year, in 10 years' time this hot new thing will be as commonplace as the air we breathe! The lower the hanging fruit, the more they talk about it and the better it tastes.

Fake it until you make it

Then again, if you look at how technology is evolving in today's smartphone environment, you are surrounded by smoke and mirrors. Smartphones are pretending to be full-fledged computers while providing less functionality. Social media is pretending to be "real-time" even though you are seeing a filtered view of everything that is actually happening. You have cached data all over the place pretending to be real-time. The lag in everything is getting greater and greater as we try to do more and more with limited bandwidth. All of this is totally invisible to a consumer base that has been trained not to expect reliability from things that claim to be reliable. Software is eating the world, BUT only in situations where it is convenient. Everything is getting hacked. Everything is amazing if you have no clue how any of it works.

The incessant need to feed the hype has given rise to a league of retweeters and likers who will say and twist anything to the point at which no one knows what they are talking about. On deeper inspection, few things hold up. AI refrigerators, AI televisions, AI image stabilization and AI assistants are all variations of the same thing. Anyone criticizing it is cast as either having a limited imagination or trying to slow down the train that is gonna bring us nice things; criticism becomes a virtual kick to someone's favorite puppy.

Conclusion

We might get the breakthrough tomorrow or later tonight. But get good and stop making things up based on hype. If something is doing what it says it's doing, then that is good. But do not move the goal posts or redefine old concepts to match new toys simply to blow hot air under them in the hope that they will float. The bullshit tide is high. Do it for the love, not for the likes. Do not settle for less than you expect. Do some research. Elevate yourself from being a spectator. One needs to be passionate in life. Mediocrity is a waste of everyone's time. Go deep or go home.
